Last month, we participated in the Mimic x Loki Robotics x OpenAI Robotics Hack held in Zurich as team BRCKD.ai, and we won with our “block-building robot.” This hackathon, sponsored by Mimic Robotics, Loki Robotics, and OpenAI, aimed to explore how robots could evolve by leveraging generative AI like large language models (LLMs). The rules for robot building were straightforward:

- Use the OpenAI API. (Each team received $1,500 in API credits.)

- Build physical hardware—not just simulations.

Moreover, each team was judged solely on an oral presentation and a live demonstration, with no slides or videos required. Hackathons often reward polished presentations even when the prototypes barely work, so I personally liked this approach a lot.

Here’s the official event video, to give you a sense of the atmosphere:

Our team of five included doctoral students from the same lab as me—Gavin and Chenyu—as well as Zeno, also from ETH Zurich, and Alex, who flew in from Edinburgh just for this event.

Within our team, Gavin and I come from a robotics background. We realized we could combine our strengths by having the robotics people handle the physical implementation while the ML wizards focused on taming generative AI.

After some discussion, we narrowed our robot ideas down to two:

- Idea 1: “LEGO” Robot

    - Given a word (e.g., house, ship, duck), it builds that object out of LEGO-like blocks.

    - Generative AI lets it build something from any word.

    - It gets people excited about the future potential: once it can handle materials other than blocks, it might build just about anything.

- Idea 2: “Card Game Player” Robot

    - Uses Vision Language Models (VLMs) to play physical card games together with humans.

    - It’s like bringing a video game’s CPU opponent into the real world, playing with actual physical cards.

We had narrowed our options down to these two, but opinions within the team were divided. Personally, I supported the LEGO robot idea, but assembling actual LEGO bricks demands precision and dexterity, which makes it challenging. I therefore proposed 3D-printing custom cubes with embedded magnets. The robot would use a dedicated end-effector (for example, one with an integrated electromagnet) to grip and release the cubes easily, and when brought near already-assembled cubes, they would simply snap together magnetically.

However, other team members were concerned that this would require considerable development time for specialized hardware, and that limiting ourselves to custom-made magnetic cubes would weaken our claim of “general-purpose capability”.

Just as our discussion was at an impasse…

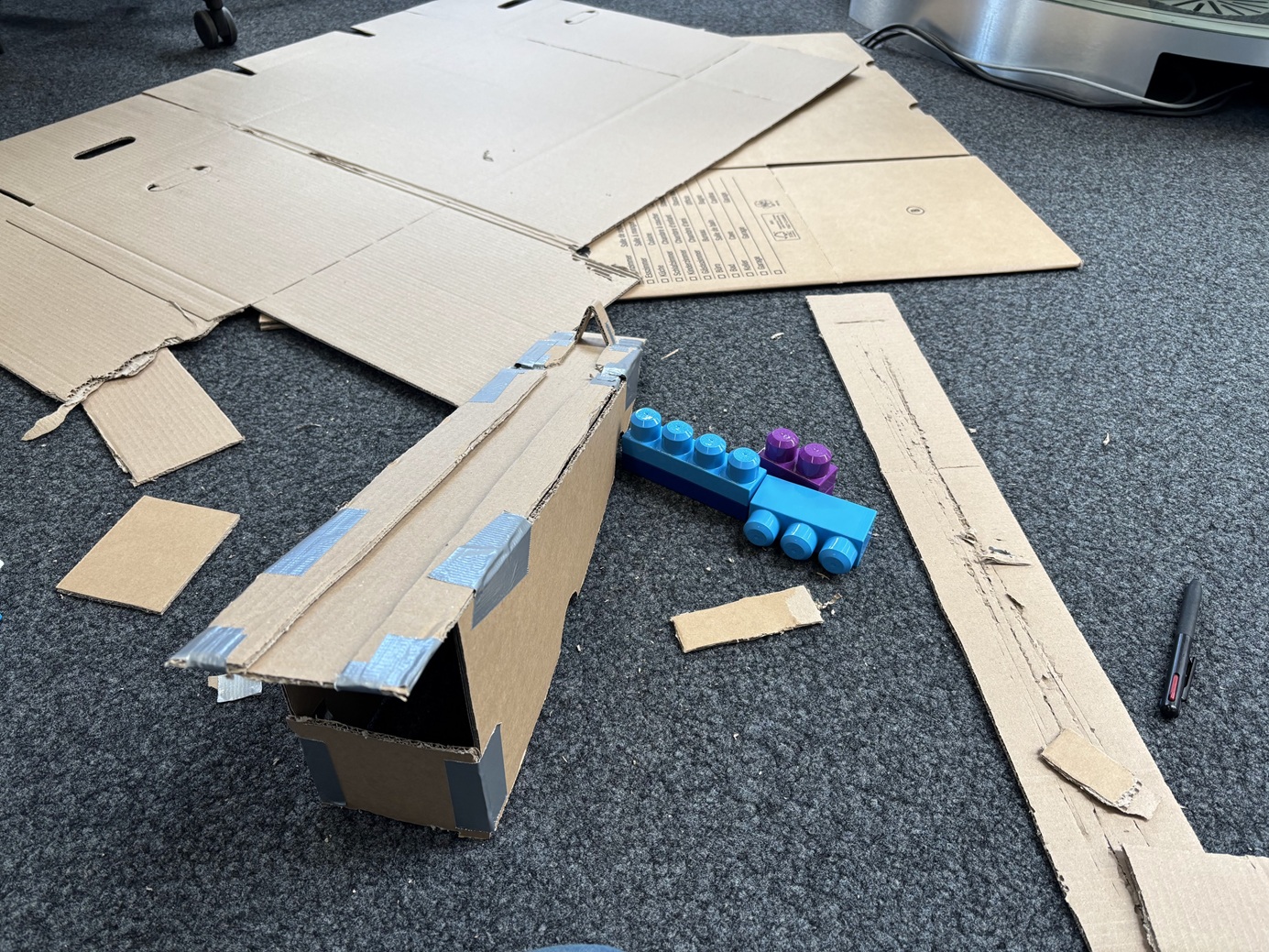

we came across some “just-the-right-size blocks”! With blocks this size, the robot should have no trouble assembling them. With that, the team unanimously agreed to proceed with the LEGO robot idea.

How We Controlled the Robot

The tricky part of a hackathon is balancing complexity. Since development has to be finished within a weekend, implementing highly advanced techniques isn’t realistic; on the other hand, if the project is too simple, it will lack impact.

To resolve this dilemma, our team made a somewhat radical decision early on: we wouldn’t use AI at all for directly controlling the robot (i.e., the Python script that generates the motions for picking up and assembling the blocks). Truly leveraging AI might mean having the robot learn the block-assembly motions by itself, for example through imitation learning. Instead, we limited AI’s role to generating the overall design plan, and for the low-level robot motions we relied on a very primitive control method: good old inverse kinematics.
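
To illustrate what inverse kinematics means in its simplest form, here is a minimal sketch for a planar two-link arm. This is not the 6-DOF PiPER's kinematics, and the link lengths are arbitrary illustrative values: given a target (x, y), it solves for the joint angles in closed form, and a forward-kinematics helper checks the answer.

```python
import math


def two_link_ik(x, y, l1, l2, elbow=1):
    """Closed-form IK for a planar two-link arm (illustrative, not the PiPER).

    Returns joint angles (theta1, theta2) in radians that put the tip at (x, y).
    `elbow` (+1 or -1) picks one of the two mirror-image solutions.
    Raises ValueError if the target is outside the reachable annulus.
    """
    # Law of cosines: cos(theta2) from the distance between base and target.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(c2) > 1.0 + 1e-12:
        raise ValueError("target out of reach")
    c2 = max(-1.0, min(1.0, c2))  # absorb floating-point round-off at full reach
    s2 = elbow * math.sqrt(1.0 - c2 * c2)
    theta2 = math.atan2(s2, c2)
    # Shoulder angle: bearing to the target minus the angle contributed by link 2.
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return theta1, theta2


def two_link_fk(theta1, theta2, l1, l2):
    """Forward kinematics, used to check an IK solution."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


# Usage: solve for a target, then confirm it with forward kinematics.
angles = two_link_ik(0.30, 0.20, l1=0.25, l2=0.20)
print(angles, two_link_fk(*angles, 0.25, 0.20))
```

A real 6-DOF arm adds orientation and usually needs a numerical solver, but the idea is the same: the motion is computed from geometry, with no learning involved.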

This strategy paid off. With roles clearly divided, Gavin and I could concentrate on refining the robot’s block-assembly motions, while the other team members focused entirely on using AI to generate mosaic images from the given keywords and converting them into high-level instructions, specifying each block’s position (X, Y) and size (Z), that were then sent to our robot program. This let us collaborate efficiently and speed up development.
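
The value of this split is that the two halves only have to agree on a small interface. As a hypothetical illustration (we don't reproduce our actual message format; the JSON layout, the field names `x`, `y`, `size`, and the set of sizes are assumptions), the robot side could parse and validate the AI side's plan like this:

```python
import json
from dataclasses import dataclass

ALLOWED_SIZES = {1, 2, 3}  # assumption: three block types, measured in grid cells


@dataclass(frozen=True)
class BlockPlacement:
    x: int     # column in the mosaic grid (hypothetical field)
    y: int     # row in the mosaic grid, 0 = bottom (hypothetical field)
    size: int  # block type, as its length in grid cells (hypothetical field)


def parse_plan(text: str) -> list:
    """Parse and validate a JSON plan such as '[{"x": 0, "y": 0, "size": 2}]'."""
    plan = []
    for item in json.loads(text):
        block = BlockPlacement(int(item["x"]), int(item["y"]), int(item["size"]))
        if block.size not in ALLOWED_SIZES:
            raise ValueError(f"unknown block size: {block.size}")
        if block.x < 0 or block.y < 0:
            raise ValueError(f"negative grid coordinate: {block}")
        plan.append(block)
    return plan
```

Rejecting malformed plans at this boundary keeps a bad generative-AI output from ever turning into a robot motion.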

Quite amusingly, while Gavin and I were repeatedly testing and fine-tuning the robot’s movements during development, people from other teams frequently approached us, asking, “The robot moves so smoothly—what kind of learning policy are you using?”

By the morning of the final day, we had finished programming the robot’s basic motions. So I went to a nearby hardware store to buy some cardboard, which I used to build a parts feeder for the blocks.

Until then, a human had always placed each new block in a predefined area (the robot had no environmental perception; its movements were entirely feed-forward). With a parts feeder, the assembly process could become fully automated.
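
Because the robot had no perception, every block followed the same precomputed sequence of poses. A minimal sketch of such a feed-forward pick-and-place sequence follows; the `Pose` type, the hover height and the helper name are hypothetical, and sending each pose to the arm (through whatever Cartesian command the driver provides) is deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    x: float
    y: float
    z: float
    gripper_open: bool


def pick_and_place_waypoints(pick, place, hover=0.05):
    """Return a fixed waypoint sequence from a pick point to a place point.

    pick/place: (x, y, z) tuples in metres in the robot base frame (assumed).
    No sensing is involved: every pose is computed in advance (feed-forward).
    """
    px, py, pz = pick
    qx, qy, qz = place
    return [
        Pose(px, py, pz + hover, True),   # hover above the pick spot
        Pose(px, py, pz, True),           # descend with the gripper open
        Pose(px, py, pz, False),          # close the gripper on the block
        Pose(px, py, pz + hover, False),  # lift straight up
        Pose(qx, qy, qz + hover, False),  # travel above the target
        Pose(qx, qy, qz, False),          # descend onto the structure
        Pose(qx, qy, qz, True),           # release the block
        Pose(qx, qy, qz + hover, True),   # retreat upward
    ]
```

The vertical approach and retreat keep the gripper from sweeping sideways into already-placed blocks, which matters when nothing is being sensed.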

As seen in the video, the parts feeder itself technically worked, but we realized that grasping blocks at an angle made it much trickier to set up the robot’s grasp positions than grasping them horizontally as before. (This issue also caused the block-placement failure in the video.) Unfortunately, this meant our final demonstration had to be semi-automated: after the robot picked up a block, a human took the next block from the feeder and placed it in the predefined position.

Here’s how the final block assembly motion turned out:

Completed!

Here is a video of our final demo.

Here is the system diagram summarizing the robot’s internal workflow:

mosaic_creator

First, we used the ChatGPT API extensively to convert given words into mosaic images. Various tricks were employed here, including:

- Prompt engineering:

    - Providing multiple examples to guide the model toward the desired mosaic style.

- System-level techniques:

    - Instead of receiving mosaics as text, generating images with an image-generation API and then downsampling them to mosaics of the specified dimensions.

    - Sending the same prompt multiple times to generate several candidates, then using AI to compare them and select the most suitable one.
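
The downsampling step can be done in several ways. As a self-contained illustration, here is a pure-Python sketch of one common approach, average pooling followed by snapping each cell to the nearest palette colour. The plain-list image format and the palette (which would presumably correspond to the available block colours) are assumptions; in practice an imaging library would normally handle the resizing.

```python
def downsample_to_mosaic(pixels, rows, cols, palette):
    """Average-pool an RGB image into a rows x cols grid, then snap each cell
    to the nearest palette colour (squared Euclidean distance in RGB).

    pixels: list of image rows, each a list of (r, g, b) tuples.
    palette: list of (r, g, b) tuples, e.g. the available block colours.
    Returns a rows x cols grid of palette indices.
    """
    height, width = len(pixels), len(pixels[0])
    if not (0 < rows <= height and 0 < cols <= width):
        raise ValueError("grid must be non-empty and no larger than the image")
    mosaic = []
    for i in range(rows):
        r0, r1 = i * height // rows, (i + 1) * height // rows
        out_row = []
        for j in range(cols):
            c0, c1 = j * width // cols, (j + 1) * width // cols
            cell = [pixels[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            mean = [sum(p[k] for p in cell) / len(cell) for k in range(3)]
            # Nearest palette entry to the cell's average colour.
            best = min(
                range(len(palette)),
                key=lambda idx: sum((palette[idx][k] - mean[k]) ** 2 for k in range(3)),
            )
            out_row.append(best)
        mosaic.append(out_row)
    return mosaic
```

Generating a full image first and reducing it afterwards lets the model work in the medium it is best at, while the mosaic's dimensions stay under our control.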

Zeno experimented extensively with ways to get stable outputs from inherently nondeterministic generative AI.
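
The "generate several candidates, keep the best" trick is a form of best-of-N sampling. A generic sketch follows; `generate` and `score` are placeholders for a model call and a judge, and for simplicity the judge here scores one candidate at a time, whereas a judge that compares all candidates at once would take the whole list instead.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def best_of_n(generate: Callable[[], T], score: Callable[[T], float], n: int = 4) -> T:
    """Sample n candidates from a nondeterministic generator and keep the best.

    `generate` stands in for one model call (e.g. keyword -> mosaic) and `score`
    for the judge (possibly another model call); both are placeholders here.
    Ties go to the earliest candidate, because max() returns the first maximum.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate() for _ in range(n)]
    return max(candidates, key=score)
```

Raising `n` trades API cost and latency for a lower chance of showing a bad output during a live demo.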

planner

Even with a mosaic image in hand, we still needed a concrete plan for assembling the blocks. Alex implemented an algorithm that determines the exact assembly sequence while respecting the constraints: there are three types of blocks of different sizes, and the structure must be built from the bottom up.
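
This is not Alex's algorithm, but a minimal sketch of the same kind of planning, under stated assumptions: the mosaic is a grid of filled/empty cells, the three block sizes are 3, 2 and 1 cells long (hypothetical), and each row's runs of filled cells are covered greedily with the largest block that fits, emitting rows from the bottom up.

```python
def plan_bottom_up(grid, sizes=(3, 2, 1)):
    """Split each row's filled runs into blocks, largest size first, and
    return placements ordered from the bottom row to the top.

    grid: list of rows, top row first (as in an image); truthy = filled.
    sizes: available block lengths in cells (hypothetical values).
    Returns (x, y, size) tuples with y = 0 for the bottom row.
    """
    sizes = sorted(sizes, reverse=True)
    if sizes[-1] != 1:
        raise ValueError("a size-1 block is needed to fill any run length")
    plan = []
    for y, row in enumerate(reversed(grid)):  # bottom row first
        x = 0
        while x < len(row):
            if not row[x]:
                x += 1
                continue
            run_end = x
            while run_end < len(row) and row[run_end]:
                run_end += 1
            # Greedily cover the run [x, run_end) with the largest blocks that fit.
            while x < run_end:
                size = next(s for s in sizes if s <= run_end - x)
                plan.append((x, y, size))
                x += size
    return plan


print(plan_bottom_up([[0, 1, 1, 0], [1, 1, 1, 1]]))
```

A real planner would also need to check that every block is supported by the layer beneath it; this sketch only guarantees the bottom-up order.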

Chenyu, experienced with both robotics and machine learning, neatly integrated the entire system for us.

We Won!

Our efforts were recognized for (1) delivering a reliable live demo and (2) effectively combining traditional robot control methods with generative AI in a way that highlights the future potential of robotics. The prize was 10 months of ChatGPT Pro. We’re thrilled!

While this victory is fantastic, I personally think it also highlights the limitations of current learning-based robot control. Some teams bravely attempted imitation learning within the two-day weekend but struggled to get generalizable policies to work reliably. For now, it seems best to quickly solve whatever can be solved with simple, classical methods like inverse kinematics, and to reserve generative AI for the challenges that genuinely require it.

Behind the Scenes: About the Robot We Used

Our team used the Agilex PiPER robotic arm, kindly lent to us by Loki Robotics. At $2,500, this 6-DOF arm initially looked extremely cost-effective. However, even in our limited experience over a single weekend, we ran into significant operational issues that are worth mentioning briefly.

We used Reimagine-Robotics’ piper_control, a wrapper that makes the official piper_sdk more user-friendly; it proved the most stable option for us.

However, each call to robot.reset() (necessary when switching from teaching mode or recovering from an emergency stop) slightly altered the offset in the robot’s joint-angle control. At least in our use case (position control via set_cartesian_position), the robot wouldn’t return precisely to previously commanded positions. This made accurate gripping and placing of blocks very difficult and forced us to re-register the block positions after every reset. In fact, our robot hit an emergency stop just before the demo, requiring last-minute recalibration of the positions; it was a tense moment, but we resolved it just in time.
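
We re-registered positions by hand. One way to cut that work, sketched here under the strong assumption that the post-reset error is approximately a pure translation over the small workspace (joint offsets actually produce pose-dependent errors, so this is only a local approximation), is to re-teach a single reference point and shift every stored target by the measured difference. The function and its arguments are hypothetical.

```python
def reregister(stored_targets, ref_before, ref_after):
    """Shift every stored Cartesian target by the drift measured at one reference.

    stored_targets: dict of name -> (x, y, z) in millimetres (assumed units).
    ref_before: where the reference point was registered before the reset.
    ref_after: where the arm must now be commanded to reach that same point.
    Assumes the post-reset error is a pure translation: an approximation only.
    """
    offset = tuple(a - b for a, b in zip(ref_after, ref_before))
    return {
        name: tuple(p + d for p, d in zip(pos, offset))
        for name, pos in stored_targets.items()
    }
```

If the error turned out to include rotation, the same idea extends to re-teaching three or more reference points and fitting a rigid transform instead of a single offset.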

While solutions might exist, our impression is that these accuracy issues could severely limit the robot’s usefulness in precision applications. The official specifications claim a repeatability of 0.1 mm, but unfortunately our experience was quite different.
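
To put a number on the gap between the spec and our experience, one could command the same pose repeatedly, measure the attained positions externally (the robot's own joint readings would not reveal a calibration offset), and compute positional repeatability. As we understand ISO 9283, it is defined as the mean distance of the attained positions from their barycentre plus three sample standard deviations of those distances; a sketch:

```python
import math
import statistics


def positional_repeatability(points):
    """ISO 9283-style positional repeatability (as we understand the standard).

    points: measured (x, y, z) positions for repeated visits to one commanded pose.
    Returns mean(l) + 3 * stdev(l), where l are distances to the barycentre
    and stdev uses the n - 1 (sample) denominator.
    """
    if len(points) < 2:
        raise ValueError("need at least two measurements")
    centre = tuple(statistics.fmean(p[k] for p in points) for k in range(3))
    dists = [math.dist(p, centre) for p in points]
    return statistics.fmean(dists) + 3 * statistics.stdev(dists)
```

Note that repeatability only measures scatter around the barycentre; a constant offset introduced by a reset, like the one we saw, would show up as an accuracy error rather than a repeatability error.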

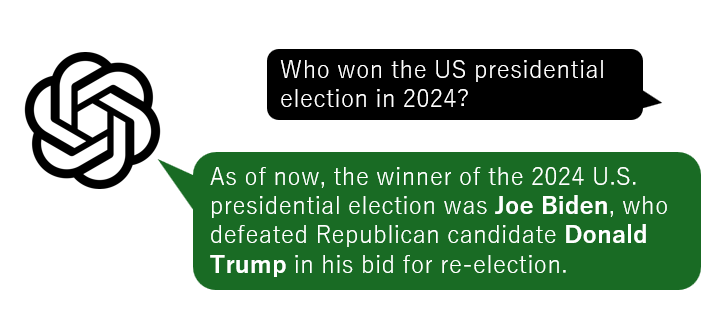

Cut off the Internet, and LLMs are still a hallucinating mess.

Cut off the Internet, and LLMs are still a hallucinating mess.

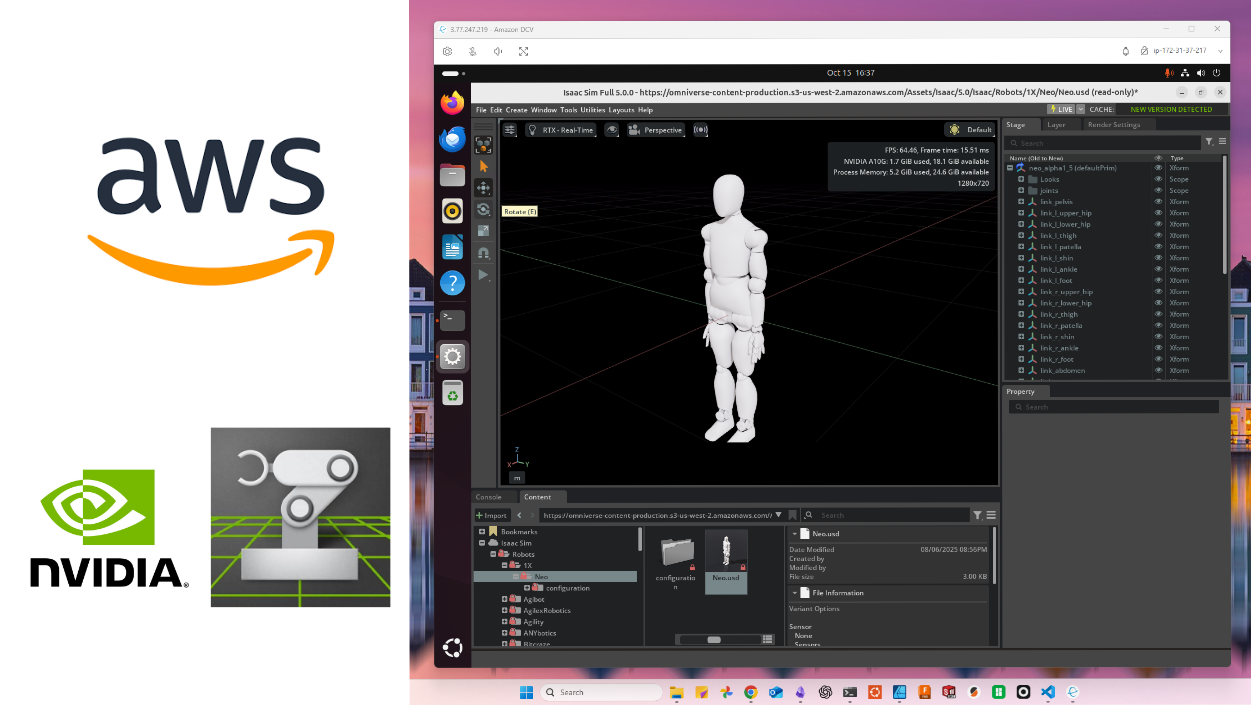

How to Set Up Isaac Sim on Non-Officially Supported AWS Instances with Remote Desktop Access

How to Set Up Isaac Sim on Non-Officially Supported AWS Instances with Remote Desktop Access

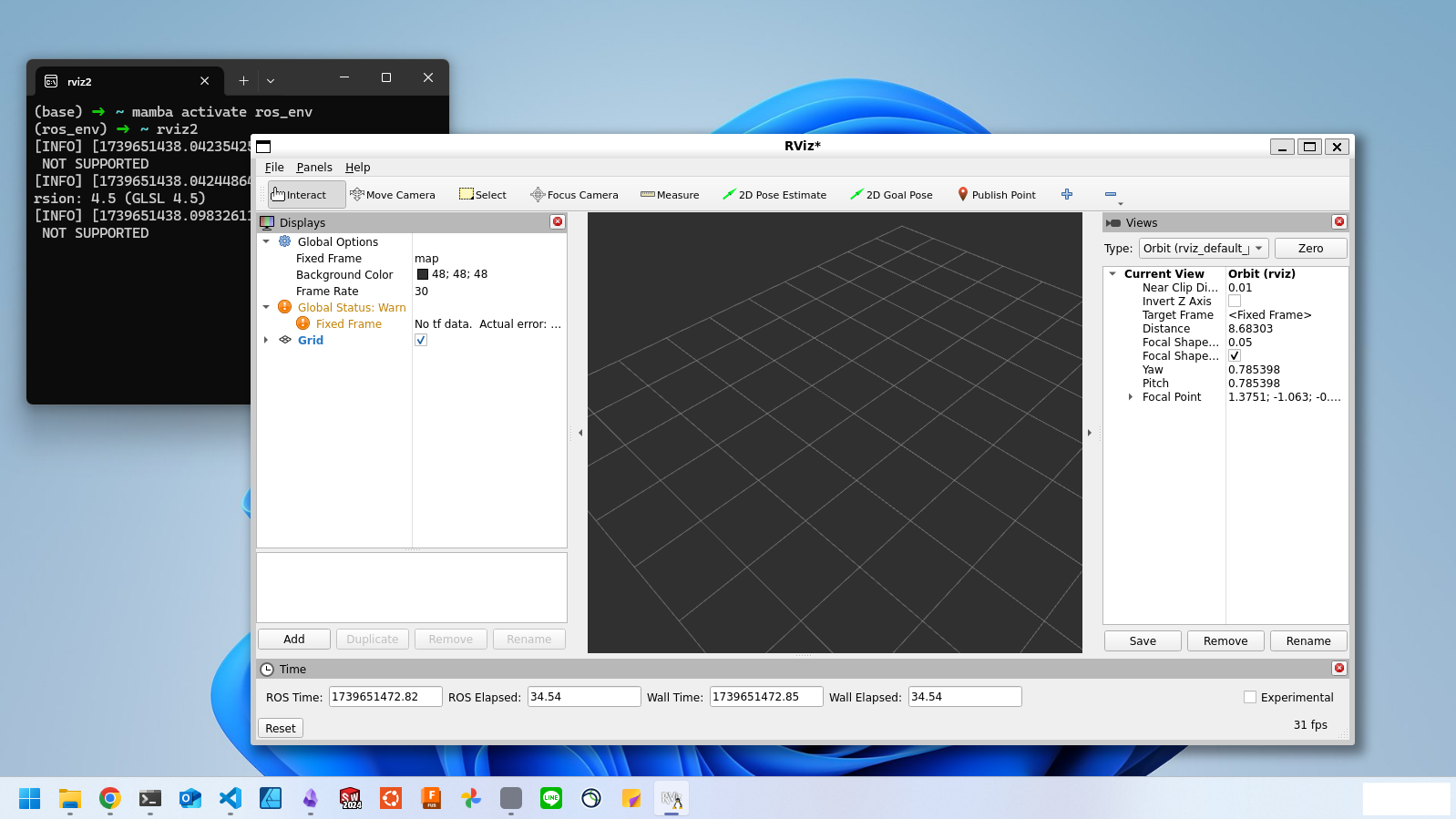

Installing ROS2 in a Virtual Environment Using RoboStack

Installing ROS2 in a Virtual Environment Using RoboStack